EfficientNet

EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks

This example uses grid search to find the best combination of alpha, beta, and gamma for EfficientNet-B1, as discussed in Section 3.3 of the paper. The search space, tuner, and configuration examples are provided here.
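As a rough illustration of what such a grid search looks like, the sketch below enumerates (alpha, beta, gamma) triples on a regular grid and keeps those that satisfy the constraint from Section 3.3 of the paper, alpha * beta^2 * gamma^2 ≈ 2 with each coefficient at least 1. The function name `candidate_coefficients`, the grid range, the step size, and the tolerance are assumptions chosen for illustration. They are not taken from this example's search space or tuner.

```python
import itertools


def candidate_coefficients(low=1.0, high=2.0, step=0.05, target=2.0, tol=0.1):
    """Enumerate (alpha, beta, gamma) on a grid and keep the triples whose
    product alpha * beta**2 * gamma**2 is within `tol` of `target`.

    All defaults are illustrative assumptions, not values from the example.
    """
    n = int(round((high - low) / step)) + 1
    # Round to two decimals so grid points compare cleanly (e.g. 1.15).
    values = [round(low + i * step, 2) for i in range(n)]
    kept = []
    for alpha, beta, gamma in itertools.product(values, repeat=3):
        # Approximate factor by which FLOPS grow when depth, width, and
        # resolution are scaled by alpha, beta, and gamma respectively.
        flops_factor = alpha * beta ** 2 * gamma ** 2
        if abs(flops_factor - target) <= tol:
            kept.append((alpha, beta, gamma))
    return kept


candidates = candidate_coefficients()
print(len(candidates), "candidate triples satisfy the constraint")
```

Each surviving triple would correspond to one trial. The constraint is what keeps the grid manageable, since most combinations on the full grid are discarded before any training happens.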

Instructions

1. Set your working directory here, in the example code directory.

2. Run `git clone https://github.com/ultmaster/EfficientNet-PyTorch` to clone ultmaster's modified version of the original EfficientNet-PyTorch. The modifications bring it as close as possible to the original TensorFlow version (including EMA, label smoothing, etc.) and add the code that gets parameters from the tuner and reports intermediate/final results. Clone it into `EfficientNet-PyTorch`; files such as `main.py` and `train_imagenet.sh` will then appear inside, as specified in the configuration files.

3. Run `nnictl create --config config_local.yml` (use `config_pai.yml` for OpenPAI) to find the best EfficientNet-B1. Adjust the training service (PAI/local/remote) and the batch size in the config files according to your environment.

For training on ImageNet, read `EfficientNet-PyTorch/train_imagenet.sh`. Download ImageNet beforehand, extract it in the directory layout PyTorch expects, and then replace `/mnt/data/imagenet` in that script with the location of your ImageNet data. The script is also a good example to follow for mounting ImageNet into the container on OpenPAI.
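The cloned trial code gets its parameters from the tuner and reports intermediate and final results. The sketch below shows the general shape of that loop under stated assumptions and is not the code in `main.py`. The functions `run_trial` and `placeholder_train_one_epoch`, the parameter keys `alpha`, `beta`, and `gamma`, and the accuracy values are all hypothetical. In a real NNI trial, the three callbacks would typically be `nni.get_next_parameter`, `nni.report_intermediate_result`, and `nni.report_final_result`. Stand-ins are used here so the sketch runs without NNI installed.

```python
def placeholder_train_one_epoch(alpha, beta, gamma, epoch):
    """Hypothetical stand-in for one epoch of training.

    Returns a made-up accuracy that ignores the coefficients; a real trial
    would build and train the scaled network here.
    """
    return min(1.0, 0.1 * (epoch + 1))


def run_trial(get_params, report_intermediate, report_final, epochs=3):
    """Fetch one configuration, train, and report metrics along the way."""
    params = get_params()  # assumed shape: {"alpha": ..., "beta": ..., "gamma": ...}
    alpha, beta, gamma = params["alpha"], params["beta"], params["gamma"]

    best_acc = 0.0
    for epoch in range(epochs):
        acc = placeholder_train_one_epoch(alpha, beta, gamma, epoch)
        report_intermediate(acc)  # one intermediate result per epoch
        best_acc = max(best_acc, acc)

    report_final(best_acc)  # the value the tuner compares across trials
    return best_acc


# Stand-in callbacks that record what would be sent to the tuner.
reported = []
best = run_trial(
    get_params=lambda: {"alpha": 1.2, "beta": 1.1, "gamma": 1.15},
    report_intermediate=reported.append,
    report_final=reported.append,
)
print("reported metrics:", reported)
```

Passing the callbacks in as arguments keeps the training loop independent of the tuning framework, which makes it easy to run locally before launching an experiment.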

Results

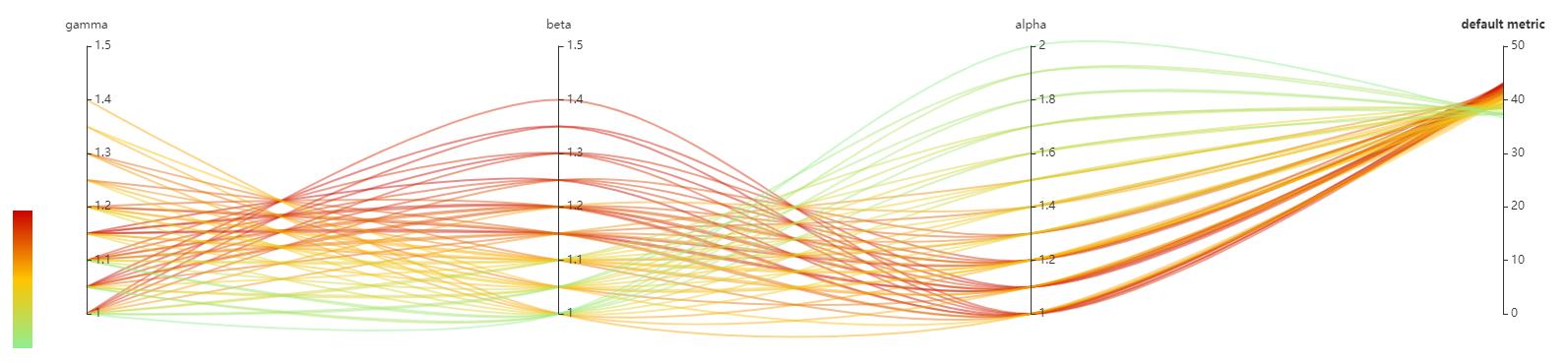

The follow image is a screenshot, demonstrating the relationship between acc@1 and alpha, beta, gamma.