NAS API Reference¶

Model space¶

- class nni.nas.nn.pytorch.LayerChoice(*args, **kwargs)[source]¶

Layer choice selects one of the

candidates, then apply it on inputs and return results.It allows users to put several candidate operations (e.g., PyTorch modules), one of them is chosen in each explored model.

New in v2.2: Layer choice can be nested.

- Parameters:

candidates (list of nn.Module or OrderedDict) – A module list to be selected from.

weights (list of float) – Prior distribution used in random sampling.

label (str) – Identifier of the layer choice.

- length¶

Deprecated. Number of ops to choose from.

len(layer_choice)is recommended.- Type:

int

- names¶

Names of candidates.

- Type:

list of str

- choices¶

Deprecated. A list of all candidate modules in the layer choice module.

list(layer_choice)is recommended, which will serve the same purpose.- Type:

list of Module

Examples

# import nni.nas.nn.pytorch as nn # declared in `__init__` method self.layer = nn.LayerChoice([ ops.PoolBN('max', channels, 3, stride, 1), ops.SepConv(channels, channels, 3, stride, 1), nn.Identity() ]) # invoked in `forward` method out = self.layer(x)

Notes

candidatescan be a list of modules or a ordered dict of named modules, for example,self.op_choice = LayerChoice(OrderedDict([ ("conv3x3", nn.Conv2d(3, 16, 128)), ("conv5x5", nn.Conv2d(5, 16, 128)), ("conv7x7", nn.Conv2d(7, 16, 128)) ]))

Elements in layer choice can be modified or deleted. Use

del self.op_choice["conv5x5"]orself.op_choice[1] = nn.Conv3d(...). Adding more choices is not supported yet.- property candidates: Dict[str, Module] | List[Module]¶

Restore the

candidatesparameters passed to the constructor. Useful when creating a new layer choices based on this one.

- class nni.nas.nn.pytorch.InputChoice(*args, **kwargs)[source]¶

Input choice selects

n_choseninputs fromchoose_from(containsn_candidateskeys).It is mainly for choosing (or trying) different connections. It takes several tensors and chooses

n_chosentensors from them. When specific inputs are chosen,InputChoicewill becomeChosenInputs.Use

reductionto specify how chosen inputs are reduced into one output. A few options are:none: do nothing and return the list directly.sum: summing all the chosen inputs.mean: taking the average of all chosen inputs.concat: concatenate all chosen inputs at dimension 1.

We don’t support customizing reduction yet.

- Parameters:

n_candidates (int) – Number of inputs to choose from. It is required.

n_chosen (int) – Recommended inputs to choose. If None, mutator is instructed to select any.

reduction (str) –

mean,concat,sumornone.weights (list of float) – Prior distribution used in random sampling.

label (str) – Identifier of the input choice.

Examples

# import nni.nas.nn.pytorch as nn # declared in `__init__` method self.input_switch = nn.InputChoice(n_chosen=1) # invoked in `forward` method, choose one from the three out = self.input_switch([tensor1, tensor2, tensor3])

- class nni.nas.nn.pytorch.Repeat(*args, **kwargs)[source]¶

Repeat a block by a variable number of times.

- Parameters:

blocks (function, list of function, module or list of module) – The block to be repeated. If not a list, it will be replicated (deep-copied) into a list. If a list, it should be of length

max_depth, the modules will be instantiated in order and a prefix will be taken. If a function, it will be called (the argument is the index) to instantiate a module. Otherwise the module will be deep-copied.depth (int or tuple of int) –

If one number, the block will be repeated by a fixed number of times. If a tuple, it should be (min, max), meaning that the block will be repeated at least

mintimes and at mostmaxtimes. If a ValueChoice, it should choose from a series of positive integers.New in version 2.8: Minimum depth can be 0. But this feature is NOT supported on graph engine.

Examples

Block() will be deep copied and repeated 3 times.

self.blocks = nn.Repeat(Block(), 3)

Block() will be repeated 1, 2, or 3 times.

self.blocks = nn.Repeat(Block(), (1, 3))

Can be used together with layer choice. With deep copy, the 3 layers will have the same label, thus share the choice.

self.blocks = nn.Repeat(nn.LayerChoice([...]), (1, 3))

To make the three layer choices independent, we need a factory function that accepts index (0, 1, 2, …) and returns the module of the

index-th layer.self.blocks = nn.Repeat(lambda index: nn.LayerChoice([...], label=f'layer{index}'), (1, 3))

Depth can be a ValueChoice to support arbitrary depth candidate list.

self.blocks = nn.Repeat(Block(), nn.ValueChoice([1, 3, 5]))

- class nni.nas.nn.pytorch.Cell(*args, **kwargs)[source]¶

Cell structure that is popularly used in NAS literature.

Find the details in:

On Network Design Spaces for Visual Recognition is a good summary of how this structure works in practice.

A cell consists of multiple “nodes”. Each node is a sum of multiple operators. Each operator is chosen from

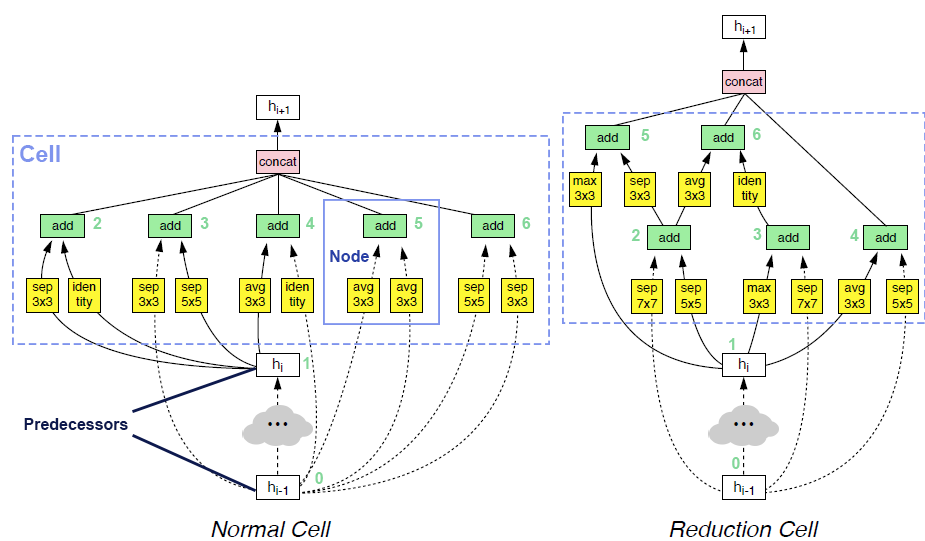

op_candidates, and takes one input from previous nodes and predecessors. Predecessor means the input of cell. The output of cell is the concatenation of some of the nodes in the cell (by default all the nodes).Two examples of searched cells are illustrated in the figure below. In these two cells,

op_candidatesare series of convolutions and pooling operations.num_nodes_per_nodeis set to 2.num_nodesis set to 5.merge_opisloose_end. Assuming nodes are enumerated from bottom to top, left to right,output_node_indicesfor the normal cell is[2, 3, 4, 5, 6]. For the reduction cell, it’s[4, 5, 6]. Please take a look at this review article if you are interested in details.

Here is a glossary table, which could help better understand the terms used above:

Name

Brief Description

Cell

A cell consists of

num_nodesnodes.Node

A node is the sum of

num_ops_per_nodeoperators.Operator

Each operator is independently chosen from a list of user-specified candidate operators.

Operator’s input

Each operator has one input, chosen from previous nodes as well as predecessors.

Predecessors

Input of cell. A cell can have multiple predecessors. Predecessors are sent to preprocessor for preprocessing.

Cell’s output

Output of cell. Usually concatenation of some nodes (possibly all nodes) in the cell. Cell’s output, along with predecessors, are sent to postprocessor for postprocessing.

Preprocessor

Extra preprocessing to predecessors. Usually used in shape alignment (e.g., predecessors have different shapes). By default, do nothing.

Postprocessor

Extra postprocessing for cell’s output. Usually used to chain cells with multiple Predecessors (e.g., the next cell wants to have the outputs of both this cell and previous cell as its input). By default, directly use this cell’s output.

Tip

It’s highly recommended to make the candidate operators have an output of the same shape as input. This is because, there can be dynamic connections within cell. If there’s shape change within operations, the input shape of the subsequent operation becomes unknown. In addition, the final concatenation could have shape mismatch issues.

- Parameters:

op_candidates (list of module or function, or dict) – A list of modules to choose from, or a function that accepts current index and optionally its input index, and returns a module. For example, (2, 3, 0) means the 3rd op in the 2nd node, accepts the 0th node as input. The index are enumerated for all nodes including predecessors from 0. When first created, the input index is

None, meaning unknown. Note that in graph execution engine, support of function inop_candidatesis limited. Please also note that, to makeCellwork with one-shot strategy,op_candidates, in case it’s a callable, should not depend on the second input argument, i.e.,op_indexin current node.num_nodes (int) – Number of nodes in the cell.

num_ops_per_node (int) – Number of operators in each node. The output of each node is the sum of all operators in the node. Default: 1.

num_predecessors (int) – Number of inputs of the cell. The input to forward should be a list of tensors. Default: 1.

merge_op ("all", or "loose_end") – If “all”, all the nodes (except predecessors) will be concatenated as the cell’s output, in which case,

output_node_indiceswill belist(range(num_predecessors, num_predecessors + num_nodes)). If “loose_end”, only the nodes that have never been used as other nodes’ inputs will be concatenated to the output. Predecessors are not considered when calculating unused nodes. Details can be found in NDS paper. Default: all.preprocessor (callable) – Override this if some extra transformation on cell’s input is intended. It should be a callable (

nn.Moduleis also acceptable) that takes a list of tensors which are predecessors, and outputs a list of tensors, with the same length as input. By default, it does nothing to the input.postprocessor (callable) – Override this if customization on the output of the cell is intended. It should be a callable that takes the output of this cell, and a list which are predecessors. Its return type should be either one tensor, or a tuple of tensors. The return value of postprocessor is the return value of the cell’s forward. By default, it returns only the output of the current cell.

concat_dim (int) – The result will be a concatenation of several nodes on this dim. Default: 1.

label (str) – Identifier of the cell. Cell sharing the same label will semantically share the same choice.

Examples

Choose between conv2d and maxpool2d. The cell have 4 nodes, 1 op per node, and 2 predecessors.

>>> cell = nn.Cell([nn.Conv2d(32, 32, 3, padding=1), nn.MaxPool2d(3, padding=1)], 4, 1, 2)

In forward:

>>> cell([input1, input2])

The “list bracket” can be omitted:

>>> cell(only_input) # only one input >>> cell(tensor1, tensor2, tensor3) # multiple inputs

Use

merge_opto specify how to construct the output. The output will then have dynamic shape, depending on which input has been used in the cell.>>> cell = nn.Cell([nn.Conv2d(32, 32, 3), nn.MaxPool2d(3)], 4, 1, 2, merge_op='loose_end') >>> cell_out_channels = len(cell.output_node_indices) * 32

The op candidates can be callable that accepts node index in cell, op index in node, and input index.

>>> cell = nn.Cell([ ... lambda node_index, op_index, input_index: nn.Conv2d(32, 32, 3, stride=2 if input_index < 1 else 1), ... ], 4, 1, 2)

Predecessor example:

class Preprocessor: def __init__(self): self.conv1 = nn.Conv2d(16, 32, 1) self.conv2 = nn.Conv2d(64, 32, 1) def forward(self, x): return [self.conv1(x[0]), self.conv2(x[1])] cell = nn.Cell([nn.Conv2d(32, 32, 3), nn.MaxPool2d(3)], 4, 1, 2, preprocessor=Preprocessor()) cell([torch.randn(1, 16, 48, 48), torch.randn(1, 64, 48, 48)]) # the two inputs will be sent to conv1 and conv2 respectively

Warning

Cellis not supported inGraphModelSpacemodel format.- output_node_indices¶

An attribute that contains indices of the nodes concatenated to the output (a list of integers).

When the cell is first instantiated in the base model, or when

merge_opisall,output_node_indicesmust berange(num_predecessors, num_predecessors + num_nodes).When

merge_opisloose_end,output_node_indicesis useful to compute the shape of this cell’s output, because the output shape depends on the connection in the cell, and which nodes are “loose ends” depends on mutation.- Type:

list of int

- op_candidates_factory¶

If the operations are created with a factory (callable), this is to be set with the factory. One-shot algorithms will use this to make each node a cartesian product of operations and inputs.

- Type:

CellOpFactory or None

- forward(*inputs)[source]¶

Forward propagation of cell.

- Parameters:

inputs (List[Tensor] | Tensor) – Can be a list of tensors, or several tensors. The length should be equal to

num_predecessors.- Returns:

The return type depends on the output of

postprocessor. By default, it’s the output ofmerge_op, which is a contenation (onconcat_dim) of some of (possibly all) the nodes’ outputs in the cell.- Return type:

Tuple[torch.Tensor] | torch.Tensor

- class nni.nas.nn.pytorch.ModelSpace(*args, **kwargs)[source]¶

The base class for model search space based on PyTorch. The out-est module should inherit this class.

Model space is written as PyTorch module for the convenience of writing code. It’s not a real PyTorch model, and shouldn’t be used as one for most cases. Most likely, the forward of

ModelSpaceis a dry run of an arbitrary model in the model space. But since there is no guarantee on which model will be chosen, and the behavior is not well tested, it’s only used for sanity check and tracing the space, and its semantics are not well-defined.Similarly for

state_dictandload_state_dict. Users should bear in mind thatModelSpaceis NOT a one-shot supernet, directly exporting its weights are unreliable and prone to error. Use one-shot strategies to mutate the model space into a supernet for such needs.Mutables in model space must all be labeled manually, unless a label prefix is provided. Every model space can have a label prefix, which is used to provide a stable automatic label generation. For example, if the label prefix is

model, all the mutables initialized in a subclass of ModelSpace (in__init__function of itself and submodules, to be specific), will be automatically labeled with a prefixmodel/. The label prefix can be manually specified upon definition of the class:class MyModelSpace(ModelSpace, label_prefix='backbone'): def __init__(self): super().__init__() self.choice = self.add_mutable(nni.choice('depth', [2, 3, 4])) print(self.choice.label) # backbone/choice

Notes

The

__init__implementation ofModelSpaceis inmodel_space_init_wrapper().

- class nni.nas.nn.pytorch.ParametrizedModule(*args, **kwargs)[source]¶

Subclass of

MutableModulesupports mutables as initialization parameters.One important feature of

ParametrizedModuleis that it automatically freeze the mutable arguments passed to__init__. This is for the convenience as well as compatibility with existing code:class MyModule(ParametrizedModule): def __init__(self, x): super().__init__() self.t = x # Will be a fixed number, e.g., 3. MyModule(nni.choice('choice1', [1, 2, 3]))

Note that the mutable arguments need to be directly posed as arguments to

__init__. They can’t be hidden in a list or dict.If users want to make a 3rd-party module parametrized, it’s recommended to do the following (taking

nn.Conv2das an example):>>> class ParametrizedConv2d(ParametrizedModule, nn.Conv2d, wraps=nn.Conv2d): ... pass >>> conv = ParametrizedConv2d(3, nni.choice('out', [8, 16])) >>> conv >>> conv.out_channels 8 >>> conv.args['out_channels'] Categorical([8, 16], label='out') >>> conv.freeze({'out': 16}) Conv2d(3, 16, kernel_size=(1, 1), stride=(1, 1))

Tip

The parametrized version of modules in

torch.nnare already provided innni.nas.nn.pytorch. Every class is prefixed withMutable. For example,nni.nas.nn.pytorch.MutableConv2d`is a parametrized version oftorch.nn.Conv2d.- args¶

The arguments used to initialize the module. Since

ParametrizedModulewill hijack the init arguments before passing to__init__, this is the only recommended way to retrieve the original init arguments back.

Warning

ParametrizedModulecan be nested. It’s also possible to put arbitrary mutable modules inside aParametrizedModule. But be careful if the inner mutable modules are dependant on the parameters ofParametrizedModule, because NNI can’t handle cases where the mutables are a dynamically changing after initialization. For example, the following snippet is WRONG:class MyModule(ParametrizedModule): def __init__(self, x): if x == 0: self.mutable = self.add_mutable(nni.choice('a', [1, 2, 3])) else: self.mutable = self.add_mutable(nni.choice('b', [4, 5, 6])) module = MyModule(nni.choice('x', [0, 1]))

- class nni.nas.nn.pytorch.MutableModule(*args, **kwargs)[source]¶

PyTorch module, but with uncertainties.

This base class provides useful tools to handle search spaces built on top of PyTorch modules, including methods like

simplify(),freeze().MutableModulecan have dangling mutables registered on it viaadd_mutable().- add_mutable(mutable)[source]¶

Register a mutable to this module. This is often used to add dangling variables that are not parameters of any

ParametrizedModule.If the mutable is also happens to be a submodule of type

MutableModule, it can be registered in the same way as PyTorch (i.e.,self.xxx = mutable). No need to add it again here.Examples

In practice, this method is often used together with

ensure_frozen().>>> class MyModule(MutableModule): ... def __init__(self): ... super().__init__() ... token_size = nni.choice('t', [4, 8, 16]) # Categorical variable here ... self.add_mutable(token_size) # Register the mutable to this module. ... real_token_size = ensure_frozen(token_size) # Real number. 4 during dry run. 4, 8 or 16 during search. ... self.token = nn.Parameter(torch.randn(real_token_size, 1))

Tip

Note that

ensure_frozen()must be used under afrozen_context(). The easiest way to do so is to invoke it within initialization of aModelSpace.Warning

Arbitrary

add_mutable()is not supported forGraphModelSpace.

- classmethod create_fixed_module(sample, *args, **kwargs)[source]¶

The classmethod is to create a brand new module with fixed architecture.

The parameter

sampleis a dict with the exactly same format assampleinfreeze(). The difference is that whencreate_fixed_module()is called, there is noMutableModuleinstance created yet. Thus it can be useful to simplify the creation of a fixed module, by saving the cost of creating aMutableModuleinstance and immediatelyfreeze()it.If automatic label generation (e.g.,

auto_label()) is used in__init__, the same number of labels should be generated in this method. Otherwise it will mess up the global label counter, and potentially affect the label of successive modules.By default, this method has a not-implemented flag, and

should_invoke_fixed_module()will returnFalsebased on this flag.

- freeze(sample)[source]¶

Return a frozen version of current mutable module. Some sub-modules can be possibly deep-copied.

If mutables are added to the module via

add_mutable(), this method must be implemented. Otherwise, it will simply look at the children modules and freeze them recursively.freeze()of subclass is encouraged to keep the original weights at best effort, but no guarantee is made, unless otherwise specified.

- mutable_descendants()[source]¶

named_mutable_descendants()without names.

- property mutables: List[Mutable]¶

Mutables that are dangling under this module.

Normally this is all the mutables that are registered via

MutableModule.add_mutable().

- named_mutable_descendants()[source]¶

Traverse the module subtree, find all descendants that are

MutableModule.If a child module is

MutableModule, return it directly, and its subtree will be ignored.If not, it will be recursively expanded, until

MutableModuleis found.

- classmethod should_invoke_fixed_module()[source]¶

Call

create_fixed_module()when fixed-arch context is detected.Typically this should be enabled. Otherwise the arch context might not be correctly handled. In cases where this flag is disabled, remember to detect arch context and manually freeze things in

__init__, or confirm that it’s a composite module and nothing needs to be frozen.By default, it returns true when

create_fixed_module()is overridden.

Model Space Hub¶

NasBench101¶

- class nni.nas.hub.pytorch.NasBench101(*args, **kwargs)[source]¶

The full search space proposed by NAS-Bench-101.

It’s simply a stack of

NasBench101Cell. Operations are conv3x3, conv1x1 and maxpool respectively.- Parameters:

stem_out_channels – Number of output channels of the stem convolution.

num_stacks – Number of stacks in the network.

num_modules_per_stack – Number of modules in each stack. Each module is a

NasBench101Cell.max_num_vertices – Maximum number of vertices in each cell.

max_num_edges – Maximum number of edges in each cell.

num_labels – Number of categories for classification.

bn_eps – Epsilon for batch normalization.

bn_momentum – Momentum for batch normalization.

NasBench201¶

- class nni.nas.hub.pytorch.NasBench201(*args, **kwargs)[source]¶

The full search space proposed by NAS-Bench-201.

It’s a stack of

NasBench201Cell.- Parameters:

stem_out_channels – The output channels of the stem.

num_modules_per_stack – The number of modules (cells) in each stack. Each cell is a

NasBench201Cell.num_labels – Number of categories for classification.

NASNet¶

- class nni.nas.hub.pytorch.NASNet(*args, **kwargs)[source]¶

Search space proposed in Learning Transferable Architectures for Scalable Image Recognition.

It is built upon

Cell, and implemented based onNDS. Its operator candidates areNASNET_OPS. It has 5 nodes per cell, and the output is concatenation of nodes not used as input to other nodes.Notes

To use NDS spaces with one-shot strategies, especially when depth is mutating (i.e.,

num_cellsis set to a tuple / list), please useNDSStagePathSampling(with ENAS and RandomOneShot) andNDSStageDifferentiable(with DARTS and Proxyless) intomutation_hooks. This is because the output shape of each stacked block inNDSStagecan be different. For example:from nni.nas.hub.pytorch.nasnet import NDSStageDifferentiable darts_strategy = strategy.DARTS(mutation_hooks=[NDSStageDifferentiable.mutate])

- Parameters:

width – A fixed initial width or a tuple of widths to choose from.

num_cells – A fixed number of cells (depths) to stack, or a tuple of depths to choose from.

dataset – The essential differences are in “stem” cells, i.e., how they process the raw image input. Choosing “imagenet” means more downsampling at the beginning of the network.

auxiliary_loss – If true, another auxiliary classification head will produce the another prediction. This makes the output of network two logits in the training phase.

drop_path_prob – Apply drop path. Enabled when it’s set to be greater than 0.

- NASNET_OPS = ['skip_connect', 'conv_3x1_1x3', 'conv_7x1_1x7', 'dil_conv_3x3', 'avg_pool_3x3', 'max_pool_3x3', 'max_pool_5x5', 'max_pool_7x7', 'conv_1x1', 'conv_3x3', 'sep_conv_3x3', 'sep_conv_5x5', 'sep_conv_7x7']¶

The candidate operations.

- class nni.nas.hub.pytorch.nasnet.NDS(*args, **kwargs)[source]¶

The unified version of NASNet search space.

We follow the implementation in unnas. See On Network Design Spaces for Visual Recognition for details.

Different NAS papers usually differ in the way that they specify

op_candidatesandmerge_op.datasethere is to give a hint about input resolution, so as to create reasonable stem and auxiliary heads.NDS has a speciality that it has mutable depths/widths. This is implemented by accepting a list of int as

num_cells/width.Notes

To use NDS spaces with one-shot strategies, especially when depth is mutating (i.e.,

num_cellsis set to a tuple / list), please useNDSStagePathSampling(with ENAS and RandomOneShot) andNDSStageDifferentiable(with DARTS and Proxyless) intomutation_hooks. This is because the output shape of each stacked block inNDSStagecan be different. For example:from nni.nas.hub.pytorch.nasnet import NDSStageDifferentiable darts_strategy = strategy.DARTS(mutation_hooks=[NDSStageDifferentiable.mutate])

- Parameters:

width – A fixed initial width or a tuple of widths to choose from.

num_cells – A fixed number of cells (depths) to stack, or a tuple of depths to choose from.

dataset – The essential differences are in “stem” cells, i.e., how they process the raw image input. Choosing “imagenet” means more downsampling at the beginning of the network.

auxiliary_loss – If true, another auxiliary classification head will produce the another prediction. This makes the output of network two logits in the training phase.

drop_path_prob – Apply drop path. Enabled when it’s set to be greater than 0.

op_candidates – List of operator candidates. Must be from

OPS.merge_op – See

Cell.num_nodes_per_cell – See

Cell.

- freeze(sample)[source]¶

Freeze the model according to the sample.

As different stages have dependencies among each other, we will recreate the whole model for simplicity. For weight inheritance purposes, this

freeze()might require re-writing.- Parameters:

sample (Dict[str, Any]) – The architecture dict.

- set_drop_path_prob(drop_prob)[source]¶

Set the drop probability of Drop-path in the network. Reference: FractalNet: Ultra-Deep Neural Networks without Residuals.

- class nni.nas.hub.pytorch.nasnet.NDSStage(*args, **kwargs)[source]¶

This class defines NDSStage, a special type of Repeat, for isinstance check, and shape alignment.

In NDS, we can’t simply use Repeat to stack the blocks, because the output shape of each stacked block can be different. This is a problem for one-shot strategy because they assume every possible candidate should return values of the same shape.

Therefore, we need

NDSStagePathSamplingandNDSStageDifferentiableto manually align the shapes – specifically, to transform the first block in each stage.This is not required though, when depth is not changing, or the mutable depth causes no problem (e.g., when the minimum depth is large enough).

Attention

Assumption: Loose end is treated as all in

merge_op(the case in one-shot), which enforces reduction cell and normal cells in the same stage to have the exact same output shape.- downsampling: bool¶

This stage has downsampling

- estimated_out_channels: int¶

Output channels of this stage. It’s estimated because it assumes

allasmerge_op.

- estimated_out_channels_prev: int¶

Output channels of cells in last stage.

ENAS¶

- class nni.nas.hub.pytorch.ENAS(*args, **kwargs)[source]¶

Search space proposed in Efficient neural architecture search via parameter sharing.

It is built upon

Cell, and implemented based onNDS. Its operator candidates areENAS_OPS. It has 5 nodes per cell, and the output is concatenation of nodes not used as input to other nodes.Notes

To use NDS spaces with one-shot strategies, especially when depth is mutating (i.e.,

num_cellsis set to a tuple / list), please useNDSStagePathSampling(with ENAS and RandomOneShot) andNDSStageDifferentiable(with DARTS and Proxyless) intomutation_hooks. This is because the output shape of each stacked block inNDSStagecan be different. For example:from nni.nas.hub.pytorch.nasnet import NDSStageDifferentiable darts_strategy = strategy.DARTS(mutation_hooks=[NDSStageDifferentiable.mutate])

- Parameters:

width – A fixed initial width or a tuple of widths to choose from.

num_cells – A fixed number of cells (depths) to stack, or a tuple of depths to choose from.

dataset – The essential differences are in “stem” cells, i.e., how they process the raw image input. Choosing “imagenet” means more downsampling at the beginning of the network.

auxiliary_loss – If true, another auxiliary classification head will produce the another prediction. This makes the output of network two logits in the training phase.

drop_path_prob – Apply drop path. Enabled when it’s set to be greater than 0.

- ENAS_OPS = ['skip_connect', 'sep_conv_3x3', 'sep_conv_5x5', 'avg_pool_3x3', 'max_pool_3x3']¶

The candidate operations.

AmoebaNet¶

- class nni.nas.hub.pytorch.AmoebaNet(*args, **kwargs)[source]¶

Search space proposed in Regularized evolution for image classifier architecture search.

It is built upon

Cell, and implemented based onNDS. Its operator candidates areAMOEBA_OPS. It has 5 nodes per cell, and the output is concatenation of nodes not used as input to other nodes.Notes

To use NDS spaces with one-shot strategies, especially when depth is mutating (i.e.,

num_cellsis set to a tuple / list), please useNDSStagePathSampling(with ENAS and RandomOneShot) andNDSStageDifferentiable(with DARTS and Proxyless) intomutation_hooks. This is because the output shape of each stacked block inNDSStagecan be different. For example:from nni.nas.hub.pytorch.nasnet import NDSStageDifferentiable darts_strategy = strategy.DARTS(mutation_hooks=[NDSStageDifferentiable.mutate])

- Parameters:

width – A fixed initial width or a tuple of widths to choose from.

num_cells – A fixed number of cells (depths) to stack, or a tuple of depths to choose from.

dataset – The essential differences are in “stem” cells, i.e., how they process the raw image input. Choosing “imagenet” means more downsampling at the beginning of the network.

auxiliary_loss – If true, another auxiliary classification head will produce the another prediction. This makes the output of network two logits in the training phase.

drop_path_prob – Apply drop path. Enabled when it’s set to be greater than 0.

- AMOEBA_OPS = ['skip_connect', 'sep_conv_3x3', 'sep_conv_5x5', 'sep_conv_7x7', 'avg_pool_3x3', 'max_pool_3x3', 'dil_sep_conv_3x3', 'conv_7x1_1x7']¶

The candidate operations.

PNAS¶

- class nni.nas.hub.pytorch.PNAS(*args, **kwargs)[source]¶

Search space proposed in Progressive neural architecture search.

It is built upon

Cell, and implemented based onNDS. Its operator candidates arePNAS_OPS. It has 5 nodes per cell, and the output is concatenation of all nodes in the cell.Notes

To use NDS spaces with one-shot strategies, especially when depth is mutating (i.e.,

num_cellsis set to a tuple / list), please useNDSStagePathSampling(with ENAS and RandomOneShot) andNDSStageDifferentiable(with DARTS and Proxyless) intomutation_hooks. This is because the output shape of each stacked block inNDSStagecan be different. For example:from nni.nas.hub.pytorch.nasnet import NDSStageDifferentiable darts_strategy = strategy.DARTS(mutation_hooks=[NDSStageDifferentiable.mutate])

- Parameters:

width – A fixed initial width or a tuple of widths to choose from.

num_cells – A fixed number of cells (depths) to stack, or a tuple of depths to choose from.

dataset – The essential differences are in “stem” cells, i.e., how they process the raw image input. Choosing “imagenet” means more downsampling at the beginning of the network.

auxiliary_loss – If true, another auxiliary classification head will produce the another prediction. This makes the output of network two logits in the training phase.

drop_path_prob – Apply drop path. Enabled when it’s set to be greater than 0.

- PNAS_OPS = ['sep_conv_3x3', 'sep_conv_5x5', 'sep_conv_7x7', 'conv_7x1_1x7', 'skip_connect', 'avg_pool_3x3', 'max_pool_3x3', 'dil_conv_3x3']¶

The candidate operations.

DARTS¶

- class nni.nas.hub.pytorch.DARTS(*args, **kwargs)[source]¶

Search space proposed in Darts: Differentiable architecture search.

It is built upon

Cell, and implemented based onNDS. Its operator candidates areDARTS_OPS. It has 4 nodes per cell, and the output is concatenation of all nodes in the cell.Note

noneis not included in the operator candidates. It has already been handled in the differentiable implementation of cell.Notes

To use NDS spaces with one-shot strategies, especially when depth is mutating (i.e.,

num_cellsis set to a tuple / list), please useNDSStagePathSampling(with ENAS and RandomOneShot) andNDSStageDifferentiable(with DARTS and Proxyless) intomutation_hooks. This is because the output shape of each stacked block inNDSStagecan be different. For example:from nni.nas.hub.pytorch.nasnet import NDSStageDifferentiable darts_strategy = strategy.DARTS(mutation_hooks=[NDSStageDifferentiable.mutate])

- Parameters:

width – A fixed initial width or a tuple of widths to choose from.

num_cells – A fixed number of cells (depths) to stack, or a tuple of depths to choose from.

dataset – The essential differences are in “stem” cells, i.e., how they process the raw image input. Choosing “imagenet” means more downsampling at the beginning of the network.

auxiliary_loss – If true, another auxiliary classification head will produce the another prediction. This makes the output of network two logits in the training phase.

drop_path_prob – Apply drop path. Enabled when it’s set to be greater than 0.

- DARTS_OPS = ['max_pool_3x3', 'avg_pool_3x3', 'skip_connect', 'sep_conv_3x3', 'sep_conv_5x5', 'dil_conv_3x3', 'dil_conv_5x5']¶

The candidate operations.

ProxylessNAS¶

- class nni.nas.hub.pytorch.ProxylessNAS(*args, **kwargs)[source]¶

The search space proposed by ProxylessNAS.

Following the official implementation, the inverted residual with kernel size / expand ratio variations in each layer is implemented with a

LayerChoicewith all-combination candidates. That means, when used in weight sharing, these candidates will be treated as separate layers, and won’t be fine-grained shared. We note thatMobileNetV3Spaceis different in this perspective.This space can be implemented as part of

MobileNetV3Space, but we separate those following conventions.- Parameters:

num_labels – The number of labels for classification.

base_widths – Widths of each stage, from stem, to body, to head. Length should be 9.

dropout_rate – Dropout rate for the final classification layer.

width_mult – Width multiplier for the model.

bn_eps – Epsilon for batch normalization.

bn_momentum – Momentum for batch normalization.

- class nni.nas.hub.pytorch.proxylessnas.InvertedResidual(in_channels, out_channels, expand_ratio, kernel_size=3, stride=1, squeeze_excite=None, norm_layer=None, activation_layer=None)[source]¶

An Inverted Residual Block, sometimes called an MBConv Block, is a type of residual block used for image models that uses an inverted structure for efficiency reasons.

It was originally proposed for the MobileNetV2 CNN architecture. It has since been reused for several mobile-optimized CNNs. It follows a narrow -> wide -> narrow approach, hence the inversion. It first widens with a 1x1 convolution, then uses a 3x3 depthwise convolution (which greatly reduces the number of parameters), then a 1x1 convolution is used to reduce the number of channels so input and output can be added.

This implementation is sort of a mixture between:

- Parameters:

in_channels (int | MutableExpression[int]) – The number of input channels. Can be a value choice.

out_channels (int | MutableExpression[int]) – The number of output channels. Can be a value choice.

expand_ratio (float | MutableExpression[float]) – The ratio of intermediate channels with respect to input channels. Can be a value choice.

kernel_size (int | MutableExpression[int]) – The kernel size of the depthwise convolution. Can be a value choice.

stride (int) – The stride of the depthwise convolution.

squeeze_excite (Callable[[int | MutableExpression[int], int | MutableExpression[int]], Module] | None) – Callable to create squeeze and excitation layer. Take hidden channels and input channels as arguments.

norm_layer (Callable[[int], Module] | None) – Callable to create normalization layer. Take input channels as argument.

activation_layer (Callable[[...], Module] | None) – Callable to create activation layer. No input arguments.

MobileNetV3Space¶

- class nni.nas.hub.pytorch.MobileNetV3Space(*args, **kwargs)[source]¶

MobileNetV3Space implements the largest search space in TuNAS.

The search dimensions include widths, expand ratios, kernel sizes, SE ratio. Some of them can be turned off via arguments to narrow down the search space.

Different from ProxylessNAS search space, this space is implemented with

ValueChoice.We use the following snipppet as reference. https://github.com/google-research/google-research/blob/20736344591f774f4b1570af64624ed1e18d2867/tunas/mobile_search_space_v3.py#L728

We have

num_blockswhich equals to the length ofself.blocks(the main body of the network). For simplicity, the following parameter specification assumesnum_blocksequals 8 (body + head). If a shallower body is intended, arrays includingbase_widths,squeeze_excite,depth_range,stride,activationshould also be shortened accordingly.- Parameters:

num_labels – Dimensions for classification head.

base_widths – Widths of each stage, from stem, to body, to head. Length should be 9, i.e.,

num_blocks + 1(because there is a stem width in front).width_multipliers – A range of widths multiplier to choose from. The choice is independent for each stage. Or it can be a fixed float. This will be applied on

base_widths, and we would also make sure that widths can be divided by 8.expand_ratios – A list of expand ratios to choose from. Independent for every block.

squeeze_excite – Indicating whether the current stage can have an optional SE layer. Expect array of length 6 for stage 0 to 5. Each element can be one of

force,optional,none.depth_range (List[Tuple[int, int]]) – A range (e.g.,

(1, 4)), or a list of range (e.g.,[(1, 3), (1, 4), (1, 4), (1, 3), (0, 2)]). If a list, the length should be 5. The depth are specified for stage 1 to 5.stride – Stride for all stages (including stem and head). Length should be same as

base_widths.activation – Activation (class) for all stages. Length is same as

base_widths.se_from_exp – Calculate SE channel reduction from expanded (mid) channels.

dropout_rate – Dropout rate at classification head.

bn_eps – Epsilon of batch normalization.

bn_momentum – Momentum of batch normalization.

ShuffleNetSpace¶

- class nni.nas.hub.pytorch.ShuffleNetSpace(*args, **kwargs)[source]¶

The search space proposed in Single Path One-shot.

The basic building block design is inspired by a state-of-the-art manually-designed network – ShuffleNetV2. There are 20 choice blocks in total. Each choice block has 4 candidates, namely

choice 3,choice 5,choice_7andchoice_xrespectively. They differ in kernel sizes and the number of depthwise convolutions. The size of the search space is \(4^{20}\).- Parameters:

num_labels (int) – Number of classes for the classification head. Default: 1000.

channel_search (bool) – If true, for each building block, the number of

mid_channels(output channels of the first 1x1 conv in each building block) varies from 0.2x to 1.6x (quantized to multiple of 0.2). Here, “k-x” means k times the number of default channels. Otherwise, 1.0x is used by default. Default: false.affine (bool) – Apply affine to all batch norm. Default: true.

AutoFormer¶

- class nni.nas.hub.pytorch.AutoFormer(*args, **kwargs)[source]¶

The search space that is proposed in AutoFormer. There are four searchable variables: depth, embedding dimension, heads number and MLP ratio.

- Parameters:

search_embed_dim – The search space of embedding dimension. Use a list to specify search range.

search_mlp_ratio – The search space of MLP ratio. Use a list to specify search range.

search_num_heads – The search space of number of heads. Use a list to specify search range.

search_depth – The search space of depth. Use a list to specify search range.

img_size – Size of input image.

patch_size – Size of image patch.

in_channels – Number of channels of the input image.

num_labels – Number of classes for classifier.

qkv_bias – Whether to use bias item in the qkv embedding.

drop_rate – Drop rate of the MLP projection in MSA and FFN.

attn_drop_rate – Drop rate of attention.

drop_path_rate – Drop path rate.

pre_norm – Whether to use pre_norm. Otherwise post_norm is used.

global_pooling – Whether to use global pooling to generate the image representation. Otherwise the cls_token is used.

absolute_position – Whether to use absolute positional embeddings.

qk_scale – The scaler on score map in self-attention.

rpe – Whether to use relative position encoding.

- classmethod load_pretrained_supernet(name, download=True, progress=True)[source]¶

Load the related supernet checkpoints.

Thanks to the weight entangling strategy that AutoFormer uses, AutoFormer releases a few trained supernet that allows thousands of subnets to be very well-trained. Under different constraints, different subnets can be found directly from the supernet, and used without any fine-tuning.

- Parameters:

name (str) – Search space size, must be one of {‘random-one-shot-tiny’, ‘random-one-shot-small’, ‘random-one-shot-base’}.

download (bool) – Whether to download supernet weights.

progress (bool) – Whether to display the download progress.

- Return type:

The loaded supernet.

- classmethod load_searched_model(name, pretrained=False, download=True, progress=True)[source]¶

Load the searched subnet model.

- Parameters:

name (str) – Search space size, must be one of {‘autoformer-tiny’, ‘autoformer-small’, ‘autoformer-base’}.

pretrained (bool) – Whether initialized with pre-trained weights.

download (bool) – Whether to download supernet weights.

progress (bool) – Whether to display the download progress.

- Returns:

The subnet model.

- Return type:

nn.Module

Module Components¶

Famous building blocks of search spaces.

- class nni.nas.hub.pytorch.modules.AutoActivation(*args, **kwargs)[source]¶

This module is an implementation of the paper Searching for Activation Functions.

- Parameters:

unit_num (int) – The number of core units.

unary_candidates (list[str] | None) – Names of unary candidates. If none, all names from

available_unary_choices()will be used.binary_candidates (list[str] | None) – Names of binary candidates. If none, all names from

available_binary_choices()will be used.label (str | None) – Label of the current module.

Notes

Currently,

beta(in operators likeBinaryParamAdd) is not per-channel parameter.

- class nni.nas.hub.pytorch.modules.NasBench101Cell(*args, **kwargs)[source]¶

Cell structure that is proposed in NAS-Bench-101.

Proposed by NAS-Bench-101: Towards Reproducible Neural Architecture Search.

This cell is usually used in evaluation of NAS algorithms because there is a “comprehensive analysis” of this search space available, which includes a full architecture-dataset that “maps 423k unique architectures to metrics including run time and accuracy”. You can also use the space in your own space design, in which scenario it should be possible to leverage results in the benchmark to narrow the huge space down to a few efficient architectures.

The space of this cell architecture consists of all possible directed acyclic graphs on no more than

max_num_nodesnodes, where each possible node (other than IN and OUT) has one ofop_candidates, representing the corresponding operation. Edges connecting the nodes can be no more thanmax_num_edges. To align with the paper settings, two vertices specially labeled as operation IN and OUT, are also counted intomax_num_nodesin our implementation, the default value ofmax_num_nodesis 7 andmax_num_edgesis 9.Input of this cell should be of shape \([N, C_{in}, *]\), while output should be \([N, C_{out}, *]\). The shape of each hidden nodes will be first automatically computed, depending on the cell structure. Each of the

op_candidatesshould be a callable that accepts computednum_featuresand returns aModule. For example,def conv_bn_relu(num_features): return nn.Sequential( nn.Conv2d(num_features, num_features, 1), nn.BatchNorm2d(num_features), nn.ReLU() )

The output of each node is the sum of its input node feed into its operation, except for the last node (output node), which is the concatenation of its input hidden nodes, adding the IN node (if IN and OUT are connected).

When input tensor is added with any other tensor, there could be shape mismatch. Therefore, a projection transformation is needed to transform the input tensor. In paper, this is simply a Conv1x1 followed by BN and ReLU. The

projectionparameters acceptsin_featuresandout_features, returns aModule. This parameter has no default value, as we hold no assumption that users are dealing with images. An example for this parameter is,def projection_fn(in_features, out_features): return nn.Conv2d(in_features, out_features, 1)

- Parameters:

op_candidates (list of callable) – Operation candidates. Each should be a function accepts number of feature, returning nn.Module.

in_features (int) – Input dimension of cell.

out_features (int) – Output dimension of cell.

projection (callable) – Projection module that is used to preprocess the input tensor of the whole cell. A callable that accept input feature and output feature, returning nn.Module.

max_num_nodes (int) – Maximum number of nodes in the cell, input and output included. At least 2. Default: 7.

max_num_edges (int) – Maximum number of edges in the cell. Default: 9.

label (str) – Identifier of the cell. Cell sharing the same label will semantically share the same choice.

Warning

NasBench101Cellis not supported for graph-based model format. It’s also not supported by most one-shot algorithms currently.

- class nni.nas.hub.pytorch.modules.NasBench201Cell(*args, **kwargs)[source]¶

Cell structure that is proposed in NAS-Bench-201.

Proposed by NAS-Bench-201: Extending the Scope of Reproducible Neural Architecture Search.

This cell is a densely connected DAG with

num_tensorsnodes, where each node is tensor. For every \(i < j\), there is an edge from i-th node to j-th node. Each edge in this DAG is associated with an operation transforming the hidden state from the source node to the target node. All possible operations are selected from a predefined operation set, defined inop_candidates. Each of theop_candidatesshould be a callable that accepts input dimension and output dimension, and returns aModule.Input of this cell should be of shape \([N, C_{in}, *]\), while output should be \([N, C_{out}, *]\). For example,

The space size of this cell would be \(|op|^{N(N-1)/2}\), where \(|op|\) is the number of operation candidates, and \(N\) is defined by

num_tensors.- Parameters:

op_candidates (list of callable) – Operation candidates. Each should be a function accepts input feature and output feature, returning nn.Module.

in_features (int) – Input dimension of cell.

out_features (int) – Output dimension of cell.

num_tensors (int) – Number of tensors in the cell (input included). Default: 4

label (str) – Identifier of the cell. Cell sharing the same label will semantically share the same choice.

Evaluator¶

- class nni.nas.evaluator.FunctionalEvaluator(function, **kwargs)[source]¶

Functional evaluator that directly takes a function and thus should be general. See

Evaluatorfor instructions on how to write this function.- function¶

The full name of the function.

- arguments¶

Keyword arguments for the function other than model.

- class nni.nas.evaluator.Evaluator[source]¶

Base class of evaluator.

To users, the evaluator is to assess the quality of a model and return a score. When an evaluator is defined, it usually accepts a few arguments, such as basic runtime information (e.g., whether to use GPU), dataset used, as well as hyper-parameters (such as learning rate). These parameters can be sometimes tunable and searched by algorithms (see

MutableEvaluator).Different evaluators could have different use scenarios and requirements on the model. For example,

Classificationis tailored for classification models, and assumes the model has aforwardmethod that takes a batch of data and returns logits. Evaluators might also have different assumptions, some of which are requirements of certain algorithms. The evaluator with the most freedom isFunctionalEvaluator, but it’s also incompatible with some algorithms.To developers, the evaluator is to implement all the logics involving forward/backward of neural networks. Sometimes the algorithm requires the training and searching at the same time (e.g., one-shot algos). In that case, although the searching part doesn’t logically belong to the evaluator, it is still the evaluator’s responsibility to implement it, and the search algorithms will make sure to properly manipulate the evaluator to achieve the goal.

Tip

Inside evaluator, you can use standard NNI trial APIs to communicate with the exploration strategy. Common usages include:

Use

nni.get_current_parameter()to get the currentExecutableModelSpace. Notice thatExecutableModelSpaceis not a directly-runnable model (e.g., a PyTorch model), which is different from the model received inevaluate().ExecutableModelSpaceobjects are useful for debugging, as well as for some evaluators which need to know extra details about how the model is sampled.Use

nni.report_intermediate_result()to report intermediate results.Use

nni.report_final_result()to report final results.

These APIs are only available when the evaluator is executed by NNI. We recommend using

nni.get_current_parameter() is not Noneto check if the APIs are available before using them. Please AVOID usingnni.get_next_parameter()because NAS framework has already handled the logic of retrieving the next parameter. Incorrectly usingnni.get_next_parameter()may cause unexpected behavior.- evaluate(model)[source]¶

To run evaluation of a model. The model is usually a concrete model. The return value of

evaluate()can be anything. Typically it’s used for test purposes.Subclass should override this.

- static mock_runtime(model)[source]¶

Context manager to mock trial APIs for standalone usage.

Under the with-context of this method,

nni.get_current_parameter()will return the given model.NOTE: This method might become a utility in trial command channel in future.

- Parameters:

model (ExecutableModelSpace) – The model to be evaluated. It should be a

ExecutableModelSpaceobject.

Examples

This method should be mostly used when testing a evaluator. A typical use case is as follows:

>>> frozen_model = model_space.freeze(sample) >>> with evaluator.mock_runtime(frozen_model): ... evaluator.evaluate(frozen_model.executable_model())

- class nni.nas.evaluator.MutableEvaluator[source]¶

Evaluators with tunable parameters by itself (e.g., learning rate).

The tunable parameters must be an argument of the evaluator’s instantiation, or an argument of the arguments’ instantiation and etc.

To use this feature, there are two requirements:

The evaluator must inherit

MutableEvaluatorrather thanEvaluator.Make sure the init arguments have been saved in

trace_kwargs, and the instance can be cloned withtrace_copy. The easiest way is to wrap the evaluator withnni.trace(). If the mutable parameter exists somewhere in the nested instantiation. All the levels must all be wrapped withnni.trace().

Examples

>>> def get_data(shuffle): ... ... >>> @nni.trace # 1. must wrap here ... class MyOwnEvaluator(MutableEvaluator): # 2. must inherit MutableEvaluator ... def __init__(self, lr, data): ... ... >>> evaluator = MyOwnEvaluator( ... lr=Categorical([0.1, 0.01]), # the argument can be tunable ... data=nni.trace(get_data)( # if there is mutable parameters inside, this must also have nni.trace ... shuffle=Categorical([False, True]) ... ) ... ) >>> evaluator.simplify() {'global/1': Categorical([0.1, 0.01], label='global/1'), 'global/2': Categorical([False, True], label='global/2')}

- freeze(sample)[source]¶

Upon freeze,

MutableEvaluatorwill freeze all the mutable parameters (as well as nested parameters), and return aFrozenEvaluator.The evaluator will not be fully initialized to save the memory, especially when parameters contain large objects such as datasets. To use the evaluator, call

FrozenEvaluator.get()to get the full usable evaluator.- Return type:

The frozen evaluator.

- class nni.nas.evaluator.pytorch.Classification(*args, **kwargs)[source]¶

Evaluator that is used for classification.

Available callback metrics in

Classificationare:train_loss

train_acc

val_loss

val_acc

- Parameters:

criterion (nn.Module) – Class for criterion module (not an instance). default:

nn.CrossEntropyLosslearning_rate (float) – Learning rate. default: 0.001

weight_decay (float) – L2 weight decay. default: 0

optimizer (Optimizer) – Class for optimizer (not an instance). default:

Adamtrain_dataloaders (DataLoader) – Used in

trainer.fit(). A PyTorch DataLoader with training samples. If thelightning_modulehas a predefined train_dataloader method this will be skipped.val_dataloaders (DataLoader or List of DataLoader) – Used in

trainer.fit(). Either a single PyTorch Dataloader or a list of them, specifying validation samples. If thelightning_modulehas a predefined val_dataloaders method this will be skipped.datamodule (LightningDataModule | None) – Used in

trainer.fit(). See Lightning DataModule.export_onnx (bool) – If true, model will be exported to

model.onnxbefore training starts. default truenum_classes (int) – Number of classes for classification task. Required for torchmetrics >= 0.11.0. default: None

trainer_kwargs (dict) – Optional keyword arguments passed to trainer. See Lightning documentation for details.

Examples

>>> evaluator = Classification()

To use customized criterion and optimizer:

>>> evaluator = Classification(nn.LabelSmoothingCrossEntropy, optimizer=torch.optim.SGD)

Extra keyword arguments will be passed to trainer, some of which might be necessary to enable GPU acceleration:

>>> evaluator = Classification(accelerator='gpu', devices=2, strategy='ddp')

- class nni.nas.evaluator.pytorch.Regression(*args, **kwargs)[source]¶

Evaluator that is used for regression.

Available callback metrics in

Regressionare:train_loss

train_mse

val_loss

val_mse

- Parameters:

criterion (nn.Module) – Class for criterion module (not an instance). default:

nn.MSELosslearning_rate (float) – Learning rate. default: 0.001

weight_decay (float) – L2 weight decay. default: 0

optimizer (Optimizer) – Class for optimizer (not an instance). default:

Adamtrain_dataloaders (DataLoader) – Used in

trainer.fit(). A PyTorch DataLoader with training samples. If thelightning_modulehas a predefined train_dataloader method this will be skipped.val_dataloaders (DataLoader or List of DataLoader) – Used in

trainer.fit(). Either a single PyTorch Dataloader or a list of them, specifying validation samples. If thelightning_modulehas a predefined val_dataloaders method this will be skipped.datamodule (LightningDataModule | None) – Used in

trainer.fit(). See Lightning DataModule.export_onnx (bool) – If true, model will be exported to

model.onnxbefore training starts. default: truetrainer_kwargs (dict) – Optional keyword arguments passed to trainer. See Lightning documentation for details.

Examples

>>> evaluator = Regression()

Extra keyword arguments will be passed to trainer, some of which might be necessary to enable GPU acceleration:

>>> evaluator = Regression(gpus=1)

- class nni.nas.evaluator.pytorch.Trainer(*args, **kwargs)[source]¶

Traced version of

pytorch_lightning.Trainer. See https://pytorch-lightning.readthedocs.io/en/stable/common/trainer.html

- class nni.nas.evaluator.pytorch.DataLoader(*args, **kwargs)[source]¶

Traced version of

torch.utils.data.DataLoader. See https://pytorch.org/docs/stable/data.html

- class nni.nas.evaluator.pytorch.Lightning(*args, **kwargs)[source]¶

Delegate the whole training to PyTorch Lightning.

Since the arguments passed to the initialization needs to be serialized,

LightningModule,TrainerorDataLoaderin this file should be used. Another option is to hide dataloader in the Lightning module, in which case, dataloaders are not required for this class to work.Following the programming style of Lightning, metrics sent to NNI should be obtained from

callback_metricsin trainer. Two hooks are added at the end of validation epoch and the end offit, respectively. The metric name and type depend on the specific task.Warning

The Lightning evaluator are stateful. If you try to use a previous Lightning evaluator, please note that the inner

lightning_moduleandtrainerwill be reused.- Parameters:

lightning_module (LightningModule) – Lightning module that defines the training logic.

trainer (Trainer) – Lightning trainer that handles the training.

train_dataloders – Used in

trainer.fit(). A PyTorch DataLoader with training samples. If thelightning_modulehas a predefined train_dataloader method this will be skipped. It can be any types of dataloader supported by Lightning.val_dataloaders (Any | None) – Used in

trainer.fit(). Either a single PyTorch Dataloader or a list of them, specifying validation samples. If thelightning_modulehas a predefined val_dataloaders method this will be skipped. It can be any types of dataloader supported by Lightning.datamodule (LightningDataModule | None) – Used in

trainer.fit(). See Lightning DataModule.fit_kwargs (Dict[str, Any] | None) – Keyword arguments passed to

trainer.fit().detect_interrupt (bool) – Lightning has a graceful shutdown mechanism. It does not terminate the whole program (but only the training) when a KeyboardInterrupt is received. Setting this to

Truewill raise the KeyboardInterrupt to the main process, so that the whole program can be terminated.

Examples

Users should define a Lightning module that inherits

LightningModule, and useTrainerandDataLoaderfrom`nni.nas.evaluator.pytorch, and make them parameters of this evaluator:import nni from nni.nas.evaluator.pytorch.lightning import Lightning, LightningModule, Trainer, DataLoader

- class nni.nas.evaluator.pytorch.LightningModule(*args, **kwargs)[source]¶

Basic wrapper of generated model. Lightning modules used in NNI should inherit this class.

It’s a subclass of

pytorch_lightning.LightningModule. See https://pytorch-lightning.readthedocs.io/en/stable/common/lightning_module.htmlSee

SupervisedLearningModuleas an example.- property model: Module¶

The inner model (architecture) to train / evaluate.

It will be only available after calling

set_model().

Multi-trial strategy¶

- class nni.nas.strategy.GridSearch(*, shuffle=True, seed=None, dedup=True)[source]¶

Traverse the search space and try all the possible combinations one by one.

- Parameters:

shuffle (bool) – Shuffle the order in a candidate list, so that they are tried in a random order. Currently, the implementation is a pseudo-random shuffle, which only shuffles the order of every 100 candidates.

seed (int | None) – Random seed.

- class nni.nas.strategy.Random(*, dedup=True, seed=None, **kwargs)[source]¶

Random search on the search space.

- Parameters:

dedup (bool) – Do not try the same configuration twice.

seed (int | None) – Random seed.

- class nni.nas.strategy.RegularizedEvolution(*, population_size=100, sample_size=25, mutation_prob=0.05, crossover=False, dedup=True, seed=None, **kwargs)[source]¶

Algorithm for regularized evolution (i.e. aging evolution). Follows “Algorithm 1” in Real et al. “Regularized Evolution for Image Classifier Architecture Search”, with several enhancements.

Sample in this algorithm are called individuals. Specifically, the first

population_sizeindividuals are randomly sampled from the search space, and the rest are generated via a selection and mutation process. While new individuals are added to the population, the oldest one is removed to keep the population size constant.- Parameters:

population_size (int) – The number of individuals to keep in the population.

sample_size (int) – The number of individuals that should participate in each tournament. When mutate,

sample_sizeindividuals can randomly selected from the population, and the best one among them will be treated as the parent.mutation_prob (float) – Probability that mutation happens in each dim.

crossover (bool) – If

True, the new individual will be a crossover between winners of two individual tournament. That means, two sets ofsample_sizeindividuals will be randomly selected from the population, and the best one in each set will be used as parents. Every dimension will be randomly selected from one of the parents.dedup (bool) – Enforce one sample to never appear twice. The population might be smaller than

population_sizeif this is set toTrueand the search space is small.seed (int | None) – Random seed.

- class nni.nas.strategy.PolicyBasedRL(*, samples_per_update=20, replay_buffer_size=None, reward_for_invalid=None, policy_fn=None, update_kwargs=None, **kwargs)[source]¶

Algorithm for policy-based reinforcement learning. This is a wrapper of algorithms provided in tianshou (PPO by default), and can be easily customized with other algorithms that inherit

BasePolicy(e.g., REINFORCE as in this paper).- Parameters:

samples_per_update (int) – How many models (trajectories) each time collector collects. After each collect, trainer will sample batch from replay buffer and do the update.

replay_buffer_size (int | None) – Size of replay buffer. If it’s none, the size will be the expected trajectory length times

samples_per_update.reward_for_invalid (float | None) – The reward for a sample that didn’t pass validation, or the training doesn’t return a metric. If not provided, failed models will be simply ignored as if nothing happened.

policy_fn (Optional[PolicyFactory]) – Since environment is created on the fly, the policy needs to be a factory function that creates a policy on-the-fly. It takes

TuningEnvironmentas input and returns a policy. By default, it will use the policy returned bydefault_policy_fn().update_kwargs (dict | None) – Keyword arguments for

policy.update. See tianshou’s BasePolicy for details. There is a special key"update_times"that can be used to specify how many timespolicy.updateis called, which can be used to sufficiently exploit the current available trajectories in the replay buffer (for example when actor and critic needs to be updated alternatively multiple times). By default, it’s{'batch_size': 32, 'repeat': 5, 'update_times': 5}.

- class nni.nas.strategy.TPE(*args, **kwargs)[source]¶

The Tree-structured Parzen Estimator (TPE) is a sequential model-based optimization (SMBO) approach.

Find the details in Algorithms for Hyper-Parameter Optimization.

SMBO methods sequentially construct models to approximate the performance of hyperparameters based on historical measurements, and then subsequently choose new hyperparameters to test based on this model.

Advanced APIs¶

Base¶

- class nni.nas.strategy.base.Strategy(model_space=None, engine=None)[source]¶

Base class for NAS strategies.

To explore a space with a strategy, use:

strategy = MyStrategy() strategy(model_space, engine)

The strategy has a

run()method, that defines the process of exploring a NAS space.Strategy is stateful. It might store information of the current

initialize()andrun()as member attributes. We do not allowrun()a strategy twice with same, or different model spaces.Subclass should override

_initialize()and_run(), as well asstate_dict()andload_state_dict()for checkpointing.- property engine: ExecutionEngine¶

Strategy should use

engineto submit models, listen to metrics, and do budget / concurrency control.The engine is set by

set_engine(), either manually, or by a NAS experiment.The engine could be either a real engine, or a middleware that wraps a real engine. It doesn’t make any difference because their interface are the same.

See also

- initialize(model_space, engine)[source]¶

Initialize the strategy.

This method should be called before

run()to initialize some states.Some strategies might even mutate the

model_space. They should return the mutated model space.load_state_dict()can be called afterinitialize()to restore the state of the strategy.Subclass override

_initialize()instead of this method.

- list_models(sort=True, limit=None)[source]¶

List all the models that is ever searched by the engine.

A typical use case of this is to get the top-performing models produced during

run().The default implementation uses

list_models()to retrieve a list of models from the execution engine.- Parameters:

sort (bool) – Whether to sort the models by their metric (in descending order). If sorted is true, only models with “Trained” status and non-

Nonemetric are returned.limit (int | None) – Limit the number of models to return.

- Return type:

An iterator of models.

- load_state_dict(state_dict)[source]¶

Load the state of the strategy. This is used for loading checkpoints.

The state of strategy is some variables that are related to the current exploration process. The loading is often done after

initialize()and beforerun().

- property model_space: ExecutableModelSpace¶

The model space that strategy is currently exploring.

It should be the same one as the input argument of

run(), but the property exists for convenience.See also

- run()[source]¶

Explore the model space.

This should be the main part of a NAS experiment. Strategies decide how to explore the model space. They can submit models to

enginefor training and evaluation.The strategy doesn’t have to wait for all the models it submits to finish training.

The caller of

run()is responsible of setting theengineandmodel_spacebefore callingrun().Subclass override

_run()instead of this method.

- class nni.nas.strategy.base.StrategyStatus(value)[source]¶

Status of a strategy.

A strategy is in one of the following statuses:

EMPTY: The strategy is not initialized.INITIALIZED: The strategy is initialized (with a model space), but not started.RUNNING: The strategy is running.SUCCEEDED: The strategy has successfully ended.INTERRUPTED: The strategy is interrupted.FAILED: The strategy is stopped due to error.

Middleware¶

- class nni.nas.strategy.middleware.Chain(strategy, *middlewares)[source]¶

Chain a

Strategy(main strategy) with severalStrategyMiddleware.All the communications between strategy and execution engine will pass through the chain of middlewares. For example, when the strategy submits a model, it will be handled by the middleware, which decides whether to hand over to the next middleware, or to manipulate, or even block the model. The last middleware is connected to the real execution engine (which might be also guarded by a few middlewares).

- Parameters:

strategy (Strategy) – The main strategy. There can be exactly one strategy which is submitting models actively, which is therefore called main strategy.

*middlewares (StrategyMiddleware) – A chain of middlewares. At least one.

See also

- class nni.nas.strategy.middleware.Deduplication(action, patience=1000, retain_history=True)[source]¶

This middleware is able to deduplicate models that are submitted by strategies.

When duplicated models are found, the middleware can be configured to, either mark the model as invalid, or find the metric of the model from history and “replay” the metrics. Regardless of which action is taken, the patience counter will always increase, and when it runs out, the middleware will say there is no more budget.

Notice that some strategies have already provided deduplication on their own, e.g.,

Random. This class is to help those strategies who do NOT have the ability of deduplication.- Parameters:

action (Literal['invalid', 'replay']) – What to do when a duplicated model is found.

invalidmeans to mark the model as invalid, whilereplaymeans to retrieve the metric of the previous same model from the engine.patience (int) – Number of continuous duplicated models received until the middleware reports no budget.

retain_history (bool) – To record all the duplicated models even if there are not submitted to the underlying engine. While turning this off might lose part of the submitted model history, it will also reduce the memory cost.

- class nni.nas.strategy.middleware.FailureHandler(*, metric=None, retry_patience=None, failure_types=(ModelStatus.Failed,), retain_history=True)[source]¶

This middleware handles failed models.

The handler supports two modes:

Retry mode: to re-submit the model to the engine, until the model succeeds or patience runs out.

Metric mode: to send a metric for the model, so that the strategy gets penalized for generating this model.

“Failure” doesn’t necessarily mean it has to be the “Failed” state. It can be other types such as “Invalid”, or “Interrupted”, etc. The middleware can thus be chained with other middlewares (e.g.,

Filter), to retry (or put metrics) on invalid models:strategy = Chain( RegularizedEvolution(), FailureHandler(metric=-1.0, failure_types=(ModelStatus.Invalid, )), Filter(filter_fn=custom_constraint) )

- Parameters:

metric (TrialMetric | None) – The metric to send when the model failed. Implies metric mode.

retry_patience (int | None) – Maximum number times of retires. Implies retry mode.

metricandretry_patiencecan’t be both set and can’t be both unset. Exactly one of them must be set.failure_types (tuple[ModelStatus, ...]) – A tuple of

ModelStatus, indicating a set of status that are considered failure.retain_history (bool) – Only has effect in retry mode. If set to

True, submitted models will be kept in a dedicated place, separated from retried models. Otherwise,list_models()might return both submitted models and retried models.

- class nni.nas.strategy.middleware.Filter(filter_fn, metric_for_invalid=None, patience=1000, retain_history=True)[source]¶

Block certain models from submitting.

When models are submitted, they will go through the filter function, to check their validity. If the function returns true, the model will be submitted as usual. Otherwise, the model will be immediately marked as invalid (and optionally have a metric to penalize the strategy).

We recommend to use this middleware to check certain constraints, or prevent the training of some bad models from happening.

- Parameters:

filter_fn (Callable[[ExecutableModelSpace], bool]) – The filter function. Input argument is a

ExecutableModelSpace. ReturningTruemeans the model is good to submit.metric_for_invalid (TrialMetric | None) – When setting to be not None, the metric will be assigned to invalid models. Otherwise, no metric will be set.

patience (int) – Number of continuous invalid models received until the middleware reports no budget.

retain_history (bool) – To faithfully record all the submitted models including the invalid ones. Setting this to false would lose the record of the invalid models, but will also be more memory-efficient. Note that the history can NOT be recovered upon

load_state_dict().

Examples

With

Filter, it becomes a lot easier to have some customized controls for the built-in strategies.For example, if I have a fancy estimator that can tell whether a model’s accuracy is above 90%, and I don’t want any model below 90% submitted for training, I can do:

def some_fancy_estimator(model) -> bool: # return True or False ... strategy = Chain( RegularizedEvolution(), Filter(some_fancy_estimator) )

If the estimator returns false, the model will be immediately marked as invalid, and will not run.

- class nni.nas.strategy.middleware.MedianStop[source]¶

Kill a running model when its best intermediate result so far is worse than the median of results of all completed models at the same number of intermediate reports.

Follow the mechanism in

MedianstopAssessorto stop trials.Warning

This only works theoretically. It can’t be used because engine doesn’t have the ability to kill a model currently.

- class nni.nas.strategy.middleware.MultipleEvaluation(repeat, retain_history=True)[source]¶

Runs each model for multiple times, and use the averaged metric as the final result.

This is useful in scenarios where model evaluation is unstable, with randomness (e.g., Reinforcement Learning).

When models are submitted, replicas of the models will be created (via deepcopy). See

submit_models(). The intermediate metrics, final metric, as well as status will be reduced in their arriving order. For example, the first intermediate metric reported by all replicas will be gathered and averaged, to be the first intermediate metric of the submitted original model. Similar for final metric and status. The status is only considered successful when all the replicas have a successful status. Otherwise, the first unsuccessful status of replicas will be used as the status of the original model.- Parameters:

repeat (int) – How many times to evaluate each model.

retain_history (bool) – If

True, keep all the submitted original models in memory. Otherwiselist_models()will return the replicated models, which, on the other hand saves some memory.

- submit_models(*models)[source]¶

Submit the models.

The method will replicate the models by

repeatnumber of times. If multiple models are submitted simultaneously, the models will be submitted replica by replica. For example, three models are submitted and they are repeated two times, the submitting order will be: model1, model2, model3, model1, model2, model3.Warning

This method might exceed the budget of the underlying engine, even if the budget shows available when the strategy submits.

This method will ignore a model if the model’s replicas is current running.

- class nni.nas.strategy.middleware.StrategyMiddleware(model_space=None, engine=None)[source]¶

StrategyMiddlewareintercepts the models, and strategically filters, mutates, or replicates them and submits them to the engine. It can also intercept the metrics reported by the engine, and manipulates them.StrategyMiddlewareis often used together withChain, which chains a main strategy and a list of middlewares. When a model is created by the main strategy, it is passed to the middlewares in order, during which each middleware have access to the model, and pass it to the next middleware. The metric does quite the opposite, i.e., it is passed from the engine, through all the middlewares, and all the way back to the main strategy.We refer to the middleware closest to the main strategy as upper-level middleware, as it exists at the upper level of the calling stack. Conversely, we refer to the middleware closest to the engine as lower-level middleware.

- property model_space: ExecutableModelSpace¶

Model space is useful for the middleware to do advanced things, e.g., sample its own models.

The model space is set by whoever uses the middleware, before the strategy starts to run.

Utilities¶

- class nni.nas.strategy.utils.DeduplicationHelper(raise_on_dup=False)[source]¶

Helper class to deduplicate samples.

Different from the deduplication on the HPO side, this class simply checks if a sample has been tried before, and does nothing else.

- dedup(sample)[source]¶

If the new sample has not been seen before, it will be added to the history and return True. Otherwise, return False directly.

If raise_on_dup is true, a

DuplicationErrorwill be raised instead of returning False.

- exception nni.nas.strategy.utils.DuplicationError(sample)[source]¶

Exception raised when a sample is duplicated.

- class nni.nas.strategy.utils.RetrySamplingHelper(retries=500, exception_types=(<class 'nni.mutable.exception.SampleValidationError'>, ), raise_last=False)[source]¶

Helper class to retry a function until it succeeds.

Typical use case is to retry random sampling until a non-duplicate / valid sample is found.

- Parameters:

retries (int) – Number of retries.

exception_types (tuple[Type[Exception]]) – Exception types to catch.

raise_last (bool) – Whether to raise the last exception if all retries failed.

One-shot strategies¶

- class nni.nas.strategy.RandomOneShot(filter=None, **kwargs)[source]¶

Train a super-net with uniform path sampling. See reference.

In each step, model parameters are trained after a uniformly random sampling of each choice. Notably, the exporting result is also a random sample of the search space.

The supported mutation primitives of RandomOneShot are:

nni.nas.nn.pytorch.ParametrizedModule(only when parameters’ type is in MutableLinear, MutableConv2d, MutableBatchNorm2d, MutableLayerNorm, MutableMultiheadAttention).

This strategy assumes inner evaluator has set automatic optimization to true.

- Parameters:

filter (ProfilerFilter | dict | Callable[[Sample], bool] | None) – A function that takes a sample and returns a boolean. We recommend using

ProfilerFilterto filter samples. If it’s a dict of keys ofprofiler, and either (or both) ofminandmax, it will be used to construct aRangeProfilerFilter.**kwargs – Parameters for

BaseOneShotStrategy.

Examples

This strategy is mostly used as a “pre”-strategy to speedup another multi-trial strategy. The multi-trial strategy can leverage the trained weights from

RandomOneShotsuch that each sampled model won’t need to be trained from scratch. See SPOS, OFA and AutoFormer for how this is done in the arts.A typical workflow looks like follows: