SlimPruner on NNI Compressor

1. Slim Pruner

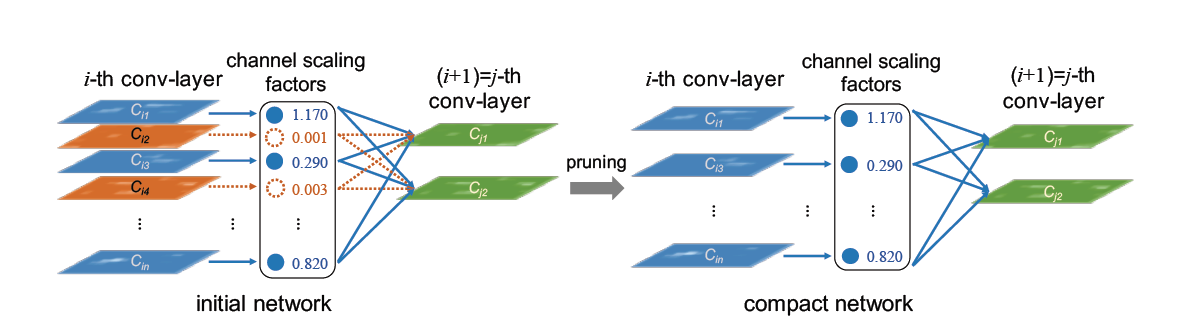

SlimPruner is a structured pruning algorithm that removes channels in convolutional layers by pruning the corresponding scaling factors in the subsequent BN layers.

The algorithm was introduced in ‘Learning Efficient Convolutional Networks through Network Slimming’ by Zhuang Liu, Jianguo Li, Zhiqiang Shen, Gao Huang, Shoumeng Yan and Changshui Zhang.

Slim Pruner prunes channels in the convolution layers by masking the corresponding scaling factors in the subsequent BN layers. During training, L1 regularization should be applied to the scaling factors of the batch normalization (BN) layers. During pruning, the scaling factors of all BN layers are ranked globally, so a sparse model that meets the given sparsity is found automatically.
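The two steps described above (an L1 penalty on the scaling factors during training, then a global ranking at pruning time) can be illustrated with a minimal, framework-free sketch. It is not the NNI implementation: the function names `l1_penalty` and `global_channel_masks`, the regularization weight `lam`, and the toy scaling factors are all assumptions made for illustration, and scaling factors tied with the threshold are assumed to be pruned.

```python
def l1_penalty(layer_gammas, lam):
    """Sparsity term added to the training loss: lam * sum(|gamma|) over all BN layers."""
    return lam * sum(abs(g) for gammas in layer_gammas.values() for g in gammas)


def global_channel_masks(layer_gammas, sparsity):
    """Rank |gamma| across ALL layers and mask the smallest `sparsity` fraction."""
    all_abs = sorted(abs(g) for gammas in layer_gammas.values() for g in gammas)
    num_pruned = int(len(all_abs) * sparsity)
    if num_pruned == 0:
        # Nothing to prune: keep every channel.
        return {name: [1] * len(gammas) for name, gammas in layer_gammas.items()}
    # Largest magnitude among the channels to be pruned (assumption: ties are pruned too).
    threshold = all_abs[num_pruned - 1]
    return {
        name: [1 if abs(g) > threshold else 0 for g in gammas]
        for name, gammas in layer_gammas.items()
    }


# Toy scaling factors for two hypothetical BN layers.
layer_gammas = {
    "bn1": [0.9, 0.05, 0.4, 0.01],
    "bn2": [0.02, 0.7, 0.3, 0.6],
}
masks = global_channel_masks(layer_gammas, sparsity=0.5)
penalty = l1_penalty(layer_gammas, lam=1e-4)
```

Because the ranking is global rather than per layer, the number of channels kept in each layer is not fixed in advance; it depends on where the small scaling factors happen to fall.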

2. Usage

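PyTorch code:

```python
from nni.compression.torch import SlimPruner

# `model` is the PyTorch model (torch.nn.Module) to be pruned.
# Target a sparsity of 0.8 on the BatchNorm2d scaling factors.
config_list = [{ 'sparsity': 0.8, 'op_types': ['BatchNorm2d'] }]
pruner = SlimPruner(model, config_list)
pruner.compress()
```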

User configuration for Slim Pruner

- sparsity: The target sparsity that the specified operations are compressed to.

- op_types: Only BatchNorm2d is supported in Slim Pruner.
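As a small illustration of these two settings, the sketch below checks a configuration before it is handed to the pruner. `check_slim_config` is a hypothetical helper, not part of NNI, and it assumes that `sparsity` is a fraction strictly between 0 and 1.

```python
def check_slim_config(config_list):
    """Hypothetical helper (not part of NNI): sanity-check a Slim Pruner config."""
    for config in config_list:
        sparsity = config["sparsity"]
        # Assumption: sparsity is a fraction strictly between 0 and 1.
        if not 0 < sparsity < 1:
            raise ValueError(f"sparsity must be in (0, 1), got {sparsity}")
        # Slim Pruner only operates on BatchNorm2d layers.
        if config["op_types"] != ["BatchNorm2d"]:
            raise ValueError("Slim Pruner only supports op_types=['BatchNorm2d']")
    return config_list


# Example: a target sparsity of 0.7 on the BatchNorm2d scaling factors.
config_list = check_slim_config([{"sparsity": 0.7, "op_types": ["BatchNorm2d"]}])
```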

3. Experiment

We reproduced one of the experiments from ‘Learning Efficient Convolutional Networks through Network Slimming’. Following the paper, we pruned 70% of the channels in VGGNet for CIFAR-10, which prunes 88.5% of the parameters. Our experimental results are as follows:

| Model | Error % (paper/ours) | Parameters | Pruned |
|---|---|---|---|
| VGGNet | 6.34/6.40 | 20.04M | |
| Pruned-VGGNet | 6.20/6.26 | 2.03M | 88.5% |

The experiment code can be found at examples/model_compress.